I was a happy user of Del.icio.us for many years until the service closed. Then I moved my links to Google Bookmarks, which offered basically the same functionality (at least for my needs): link storage with title, tags and comments. I’ve carefully tagged and filed more than 2500 links since I started, and I’ve learnt to appreciate the usefulness of searching by tag to find again some precious information that was valuable to me in the past.

Google Bookmarks is a very old and simple service that “just works”. Sometimes it looked as if Google had just forgotten about it and let it run for years without anybody noticing… until now. It’s closing in September 2021.

I didn’t want to lose all my links, still need a link database searchable by tags and don’t want to be locked-in again in a similar service that might close in some years, so I wrote my own super-simple alternative to it. It’s called bs, sort of bookmark search.

The usage can’t be simpler, just add the tag you want to look for and it will print a list of links that have that tag:

$ bs webassembly
title = Canvas filled three ways: JS, WebAssembly and WebGL | Compile
url = https://compile.fi/canvas-filled-three-ways-js-webassembly-and-webgl/
tags = canvas,graphics,html5,wasm,webassembly,webgl
date = 2020-02-18 16:48:56
comment =

title = Compiling to WebAssembly: It’s Happening! ★ Mozilla Hacks – the Web developer blog
url = https://hacks.mozilla.org/2015/12/compiling-to-webassembly-its-happening/
tags = asm.js,asmjs,emscripten,llvm,toolchain,web,webassembly
date = 2015-12-18 09:14:35
comment =

If you call the tool without parameters, it prompts for the data needed to insert a new link, or to edit an existing one if the entered url matches a link already in the database:

$ bs
url: https://compile.fi/canvas-filled-three-ways-js-webassembly-and-webgl/
title: Canvas filled three ways: JS, WebAssembly and WebGL | Compile
tags: canvas,graphics,html5,wasm,webassembly,webgl
comment:

The data is stored in an SQLite database, and I’ve written some JavaScript snippets to import the Delicious and Google Bookmarks exported bookmarks files. Those snippets are meant to be copy-pasted into the JavaScript console of your browser while you have the exported bookmarks HTML file open in it. They’ll generate SQL statements that will populate the database for the first time with your preexisting data.

For now the tool doesn’t allow deleting bookmarks (I haven’t had the need yet) and I still need to find a way to simplify its usage through the browser with a bookmarklet to ease adding new bookmarks automatically. But that’s a task for another day. For now it’s enough to know that my bookmarks are safe.

Enjoy!

[UPDATE: 2020-09-08]

I’ve now coded an alternate variant of the database client that can be hosted on any web server with PHP and SQLite3. The bookmarks can now be managed from a browser in a centralized way, in a similar fashion as you could before with Google Bookmarks and Delicious. As you can see in the screenshot, the style resembles Google Bookmarks in some way.

You can easily create a quick search / search engine link in Firefox and Chrome (I use “d” as keyword, a tradition from the Delicious days, so that if I type “d debug” in the browser search bar it will look for that tag in the bookmark search page). Also, the  button opens a popup that shows a bookmarklet code that you can add to your browser bookmark bar. When you click on that bookmarklet, the edit page prefilled with the current page info is opened, so you can insert or edit a new entry.

There’s a trick to use the bookmarklet on Android Chrome: Use a rare enough name for the bookmarklet (I used “+ Bookmark  ”). Then, when you want to add the current page to the webapp, just start typing “+ book”… in the search bar and the saved bookmarklet link will appear as an autocomplete option. Click on it and that’s it.

Enjoy++!

by eocanha on August 07, 2021 12:29 PM

A couple of months ago I had the pleasure of speaking at the 43rd International Conference on Software Engineering (aka ICSE 2021), in the context of its “Spanish Industry Case Studies” track. We were invited to give a high level overview of the Chromium project and how Igalia contributes to it upstream.

This was an unusual chance to speak at a forum other than the usual conferences I attend, so I welcomed it as a double opportunity: to explain the project to people less familiar with Chromium than those attending events such as BlinkOn or the Web Engines Hackfest, and to spread some awareness of our work there.

Contributing to Chromium is something we’ve been doing for quite a few years already, but I think it’s fair to say that in the past 2-3 years we have intensified our contributions to the project even more and diversified the areas that we contribute to, something I’ve tried to reflect in this talk in no more than 25 minutes (quite a challenge!). Actually, it’s precisely because of this amount of contributions that we’re currently the 2nd biggest non-Google contributor to the project in number of commits, and among the Top 5 contributors by team size (see a highlight on this from BlinkOn 14’s keynote). For a small consultancy company such as ours, it’s certainly something to feel proud of.

With all this in mind, I organized the talk into 2 main parts: First a general introduction to the Chromium project and then a summary of the main upstream work that we at Igalia have contributed recently to it. I focused on the past year and a half, since that seemed like a good balance that allowed me to highlight the most important bits without adding too much information. And from what I can tell based on the feedback received so far, it seems the end result has been helpful and useful for some people without prior knowledge to understand things such as the differences between Chromium and Chrome, what ChromiumOS is and how our work on several different fronts (e.g. CSS, Accessibility, Ozone/X11/Wayland, MathML, Interoperability…) fits into the picture.

Obviously, the more technically inclined you are, and the more you know about the project, the more you’ll understand the different bits of information condensed into this talk, but my main point here is that you shouldn’t need any of that to be able to follow it, or at least that was my intention (but please let me know in the comments if you have any feedback). Here you have it:

You can watch the talk online (24:05 min) on our YouTube channel, and grab the original slide deck as a PDF in case you want it for reference, or to check the many links I included as pointers to further information and to the different sources used.

Last, I don’t want to finish this post without thanking the organizers once again for the invitation and for running the event, and in particular Andrés-Leonardo Martínez-Ortiz and Javier Provecho for taking care of the specific details involved with the “Spanish Industry Case Studies” track.

Thank you all

by mario on July 22, 2021 02:16 PM

This is the last post of the series showing interesting debugging tools, I hope you have found it useful. Don’t miss the custom scripts at the bottom to process GStreamer logs, help you highlight the interesting parts and find the root cause of difficult bugs. Here are also the previous posts of the series:

When pkg-config finds the PKG_CONFIG_DEBUG_SPEW env var, it explains all the steps used to resolve the packages:

PKG_CONFIG_DEBUG_SPEW=1 /usr/bin/pkg-config --libs x11

This is useful to find out why a particular package isn’t found and what the default values for PKG_CONFIG_PATH are when it’s not defined. For example:

Adding directory '/usr/local/lib/x86_64-linux-gnu/pkgconfig' from PKG_CONFIG_PATH
Adding directory '/usr/local/lib/pkgconfig' from PKG_CONFIG_PATH
Adding directory '/usr/local/share/pkgconfig' from PKG_CONFIG_PATH
Adding directory '/usr/lib/x86_64-linux-gnu/pkgconfig' from PKG_CONFIG_PATH
Adding directory '/usr/lib/pkgconfig' from PKG_CONFIG_PATH
Adding directory '/usr/share/pkgconfig' from PKG_CONFIG_PATH

If we have tuned PKG_CONFIG_PATH, maybe we also want to add the default paths. For example:

SYSROOT=~/sysroot-x86-64

export PKG_CONFIG_PATH=${SYSROOT}/usr/local/lib/pkgconfig:${SYSROOT}/usr/lib/pkgconfig

# Add also the standard pkg-config paths to find libraries in the system

export PKG_CONFIG_PATH=${PKG_CONFIG_PATH}:/usr/local/lib/x86_64-linux-gnu/pkgconfig:\
/usr/local/lib/pkgconfig:/usr/local/share/pkgconfig:/usr/lib/x86_64-linux-gnu/pkgconfig:\
/usr/lib/pkgconfig:/usr/share/pkgconfig

# This tells pkg-config where the "system" pkg-config dir is. This is useful when cross-compiling for another
# architecture, to avoid pkg-config using the system .pc files and mixing host and target libraries

export PKG_CONFIG_LIBDIR=${SYSROOT}/usr/lib

# This could have been used for cross compiling:

#export PKG_CONFIG_SYSROOT_DIR=${SYSROOT}

Sometimes it’s useful to use our own modified/unminified files with a 3rd party service we don’t control. Mitmproxy can be used as a man-in-the-middle proxy, but I haven’t tried it personally yet. What I have tried (with WPE) is this:

Add an /etc/hosts entry to point the host serving the files we want to change to an IP address controlled by us.

ResourceRequestBase(const URL& url, ResourceRequestCachePolicy policy)

: m_url(url)

, m_timeoutInterval(s_defaultTimeoutInterval)

...

, m_isAppBound(false)

{

if (m_url.host().toStringWithoutCopying().containsIgnoringASCIICase(String("out-of-control-service.com"))

&& m_url.protocol().containsIgnoringASCIICase(String("https"))) {

printf("### %s: URL %s detected, changing from https to http\n",

__PRETTY_FUNCTION__, m_url.string().utf8().data());

fflush(stdout);

m_url.setProtocol(String("http"));

}

}

:bulb: Pro tip: If you have to debug minified/obfuscated JavaScript code and don’t have a deobfuscated version to use in a man-in-the-middle fashion, use http://www.jsnice.org/ to deobfuscate it and get meaningful variable names.

If your computer has a “shared internet connection” enabled in Network Manager and provides access to a dependent device, you can control the bandwidth offered to that device. This is useful to trigger quality changes on adaptive streaming videos from services out of your control.

This can be done using tc, the Traffic Control tool from the Linux kernel. You can use this script to automate the process (edit it to suit your needs).

I use these scripts in my daily job to look for strange patterns in GStreamer logs that help me to find the cause of the bugs I’m debugging:

h: Highlights each expression in the command line in a different color.
mgrep: Greps (only) for the lines with the expressions in the command line and highlights each expression in a different color.
filter-time: Gets a subset of the log lines between a start and (optionally) an end GStreamer log timestamp.
highlight-threads: Highlights each thread in a GStreamer log with a different color. That way it’s easier to follow a thread with the naked eye.
remove-ansi-colors: Removes the color codes from a colored GStreamer log.
aha: ANSI-HTML-Adapter converts plain text with color codes to HTML, so you can share your GStreamer logs from a web server (eg: for bug discussion). Available in most distros.
gstbuffer-leak-analyzer: Analyzes a GStreamer log and shows imbalances in the creation/destruction of GstBuffer and GstMemory objects.

by eocanha on May 25, 2021 06:00 AM

In this new post series, I’ll show you how both existing and ad-hoc tools can be helpful to find the root cause of some problems. Here are also the older posts of this series in case you find them useful:

If you’re going crazy because a program seems to ignore a config it should be using, just use strace to check which config files, libraries or other kinds of files the program is actually using. Use the grep rules you need to refine the search:

$ strace -f -e trace=%file nano 2> >(grep 'nanorc')

access("/etc/nanorc", R_OK) = 0

access("/usr/share/nano/javascript.nanorc", R_OK) = 0

access("/usr/share/nano/gentoo.nanorc", R_OK) = 0

...

First, try to strace -e trace=signal -p 1234 the killed process.

If that doesn’t work (eg: because it’s being killed with the uncatchable SIGKILL signal), then you can resort to modifying the kernel source code (signal.c) to log the calls to kill():

SYSCALL_DEFINE2(kill, pid_t, pid, int, sig)

{

struct task_struct *tsk_p;

...

/* Log SIGKILL */

if ((sig & 0x1F) == 9) {

tsk_p = find_task_by_vpid(pid);

if (tsk_p) {

printk(KERN_DEBUG "Sig: %d from pid: %d (%s) to pid: %d (%s)\n",

sig, current->pid, current->comm, pid, tsk_p->comm);

} else {

printk(KERN_DEBUG "Sig: %d from pid: %d (%s) to pid: %d\n",

sig, current->pid, current->comm, pid);

}

}

...

}

If you ever find yourself with little time in front of a stubborn build system and, no matter what you try, you can’t get the right flags to the compiler, think about putting something (a wrapper) between the build system and the compiler. Example for g++:

#!/bin/bash

main() {

# Build up arg[] array with all options to be passed

# to subcommand.

i=0

for opt in "$@"; do

case "$opt" in

-O2) ;; # Removes this option

*)

arg[i]="$opt" # Keeps the others

i=$((i+1))

;;

esac

done

EXTRA_FLAGS="-O0" # Adds extra option

echo "g++ ${EXTRA_FLAGS} ${arg[@]}" # >> /tmp/build.log # Logs the command

/usr/bin/ccache g++ ${EXTRA_FLAGS} "${arg[@]}" # Runs the command

}

main "$@"

Make sure that the wrappers appear earlier than the real commands in your PATH.

The make wrapper can also call remake instead. Remake is fully compatible with make but has features to help debugging compilation and makefile errors.

The ISOBMFF Box Structure Viewer online tool allows you to upload an MP4 file and explore its structure.

by eocanha on May 18, 2021 06:00 AM

This is the last post in the instrumenting source code series. I hope you find the tricks below as useful as the previous ones.

In this post I show some more useful debugging tricks. Don’t forget to have a look at the other posts of the series:

The source code shown below must be placed in the .h where the class to be debugged is defined. It’s written in a way that doesn’t need to rebuild RefCounted.h, so it saves a lot of build time. It logs all refs, unrefs and adoptPtrs, so that any anomaly in the refcounting can be traced and investigated later. To use it, just make your class inherit from LoggedRefCounted instead of RefCounted.

Example output:

void WTF::adopted(WTF::LoggedRefCounted<T>*) [with T = WebCore::MediaSourceClientGStreamerMSE]: this=0x673c07a4, refCount 1
void WTF::adopted(WTF::LoggedRefCounted<T>*) [with T = WebCore::MediaSourceClientGStreamerMSE]: this=0x673c07a4, refCount 1
^^^ Two adopts, this is not good.
void WTF::LoggedRefCounted<T>::ref() [with T = WebCore::MediaSourceClientGStreamerMSE]: this=0x673c07a4, refCount 1 --> ...
void WTF::LoggedRefCounted<T>::ref() [with T = WebCore::MediaSourceClientGStreamerMSE]: this=0x673c07a4, refCount ... --> 2
void WTF::LoggedRefCounted<T>::deref() [with T = WebCore::MediaSourceClientGStreamerMSE]: this=0x673c07a4, refCount 2 --> ...
void WTF::LoggedRefCounted<T>::deref() [with T = WebCore::MediaSourceClientGStreamerMSE]: this=0x673c07a4, refCount ... --> 1
void WTF::adopted(WTF::LoggedRefCounted<T>*) [with T = WebCore::MediaSourceClientGStreamerMSE]: this=0x673c07a4, refCount 1
void WTF::LoggedRefCounted<T>::deref() [with T = WebCore::MediaSourceClientGStreamerMSE]: this=0x673c07a4, refCount 1 --> ...
void WTF::LoggedRefCounted<T>::deref() [with T = WebCore::MediaSourceClientGStreamerMSE]: this=0x673c07a4, refCount 1 --> ...
^^^ Two recursive derefs, not good either.

#include "Logging.h"

namespace WTF {

template<typename T> class LoggedRefCounted : public WTF::RefCounted<T> {

WTF_MAKE_NONCOPYABLE(LoggedRefCounted); WTF_MAKE_FAST_ALLOCATED;

public:

void ref() {

printf("%s: this=%p, refCount %d --> ...\n", __PRETTY_FUNCTION__, this, WTF::RefCounted<T>::refCount()); fflush(stdout);

WTF::RefCounted<T>::ref();

printf("%s: this=%p, refCount ... --> %d\n", __PRETTY_FUNCTION__, this, WTF::RefCounted<T>::refCount()); fflush(stdout);

}

void deref() {

printf("%s: this=%p, refCount %d --> ...\n", __PRETTY_FUNCTION__, this, WTF::RefCounted<T>::refCount()); fflush(stdout);

WTF::RefCounted<T>::deref();

printf("%s: this=%p, refCount ... --> %d\n", __PRETTY_FUNCTION__, this, WTF::RefCounted<T>::refCount()); fflush(stdout);

}

protected:

LoggedRefCounted() { }

~LoggedRefCounted() { }

};

template<typename T> inline void adopted(WTF::LoggedRefCounted<T>* object)

{

printf("%s: this=%p, refCount %d\n", __PRETTY_FUNCTION__, object, (object)?object->refCount():0); fflush(stdout);

adopted(static_cast<RefCountedBase*>(object));

}

} // Namespace WTF

WebProcessMainGtk and WebProcessMainWPE will sleep for 30 seconds if a special environment variable is defined:

export WEBKIT2_PAUSE_WEB_PROCESS_ON_LAUNCH=1

It only works #if ENABLE(DEVELOPER_MODE), so you might want to remove those ifdefs if you’re building in Release mode.

In big pipelines (e.g. playbin) it can be very hard to find what element is replying to a query or handling an event. Even using gdb can be extremely tedious due to the very high level of recursion. My coworker Alicia commented that using log tracers is more helpful in this case.

GST_TRACERS=log enables additional GST_TRACE() calls all across GStreamer. The following example logs entries and exits into the query function.

GST_TRACERS=log GST_DEBUG='query:TRACE'

The names of the logging categories are somewhat inconsistent:

The log tracer code is in subprojects/gstreamer/plugins/tracers/gstlog.c.

by eocanha on May 11, 2021 06:00 AM

In this post I show some more useful debugging tricks. Check also the other posts of the series:

The thread id is generated by Linux and can take values higher than 1-9, just like PIDs. This thread number is useful to know which function calls are issued by the same thread, avoiding confusion between threads.

#include <stdio.h>

#include <unistd.h>

#include <sys/syscall.h>

printf("%s [%ld]\n", __PRETTY_FUNCTION__, (long)syscall(SYS_gettid)); /* syscall() returns long */

fflush(stdout);

We redefine the GST_OBJECT_LOCK/UNLOCK/TRYLOCK macros to print the calls, compare locks against unlocks, and see who’s not releasing its lock:

#include "wtf/Threading.h"

#define GST_OBJECT_LOCK(obj) do { \

printf("### [LOCK] %s [%p]\n", __PRETTY_FUNCTION__, &Thread::current()); fflush(stdout); \

g_mutex_lock(GST_OBJECT_GET_LOCK(obj)); \

} while (0)

#define GST_OBJECT_UNLOCK(obj) do { \

printf("### [UNLOCK] %s [%p]\n", __PRETTY_FUNCTION__, &Thread::current()); fflush(stdout); \

g_mutex_unlock(GST_OBJECT_GET_LOCK(obj)); \

} while (0)

#define GST_OBJECT_TRYLOCK(obj) ({ \

gboolean result = g_mutex_trylock(GST_OBJECT_GET_LOCK(obj)); \

if (result) { \

printf("### [LOCK] %s [%p]\n", __PRETTY_FUNCTION__, &Thread::current()); fflush(stdout); \

} \

result; \

})

Warning: The statement expression that allows the TRYLOCK macro to return a value is a GNU extension, so it will only work on GCC and compatible compilers such as Clang.

There’s a way to know which thread has taken a lock in glib/GStreamer using gdb. First locate the stalled thread:

(gdb) thread
(gdb) bt
#2 0x74f07416 in pthread_mutex_lock ()
#3 0x7488aec6 in gst_pad_query ()
#4 0x6debebf2 in autoplug_query_allocation ()
(gdb) frame 3
#3 0x7488aec6 in gst_pad_query (pad=pad@entry=0x54a9b8, ...)
4058 GST_PAD_STREAM_LOCK (pad);

Now get the process id (PID) and use the pthread_mutex_t structure to print the Linux thread id that has acquired the lock:

(gdb) call getpid()
$30 = 6321
(gdb) p ((pthread_mutex_t*)pad.stream_rec_lock.p)->__data.__owner
$31 = 6368
(gdb) thread find 6321.6368
Thread 21 has target id 'Thread 6321.6368'

If you’re using C++, you can define a tracer class. This is for webkit, but you get the idea:

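// The tracer must be a named local object: an unnamed temporary would be destroyed immediately
#define MYTRACER() MyTracer myTracer(__PRETTY_FUNCTION__)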

class MyTracer {

public:

MyTracer(const gchar* functionName)

: m_functionName(functionName) {

printf("### %s : begin %d\n", m_functionName.utf8().data(), currentThread()); fflush(stdout);

}

virtual ~MyTracer() {

printf("### %s : end %d\n", m_functionName.utf8().data(), currentThread()); fflush(stdout);

}

private:

String m_functionName;

};

And use it like this in all the functions you want to trace:

void somefunction() {

MYTRACER();

// Some other code...

}

The constructor will log when the execution flow enters into the function and the destructor will log when the flow exits.

In the C code, just call raise(SIGINT) (simulate CTRL+C, normally the program would finish).

And then, in a previously attached gdb, after breaking and having debugged all you needed, just continue the execution by ignoring the signal or just plainly continuing:

(gdb) signal 0
(gdb) continue

There’s a way to do the same but attaching gdb after the raise. Use raise(SIGSTOP) instead (simulate CTRL+Z). Then attach gdb, locate the thread calling raise and switch to it:

(gdb) thread apply all bt
[now search for "raise" in the terminal log]
Thread 36 (Thread 1977.2033):
#1 0x74f5b3f2 in raise () from /home/enrique/buildroot/output2/staging/lib/libpthread.so.0
(gdb) thread 36

Now, from a terminal, send a continuation signal: kill -SIGCONT 1977. Finally instruct gdb to single-step only the current thread (IMPORTANT!) and run some steps until all the raises have been processed:

(gdb) set scheduler-locking on
(gdb) next // Repeat several times...

Just use this macro:

GST_DEBUG_FUNCPTR_NAME(func)

RefCountedLeakCounter is a tool class that can help to debug reference leaks by printing messages like these when WebKit exits:

LEAK: 2 XMLHttpRequest
LEAK: 25 CachedResource
LEAK: 3820 WebCoreNode

To use it you have to modify the particular class you want to debug:

wtf/RefCountedLeakCounter.h
DEFINE_DEBUG_ONLY_GLOBAL(WTF::RefCountedLeakCounter, myClassCounter, ("MyClass"));
myClassCounter.increment()
myClassCounter.decrement()

by eocanha on May 04, 2021 06:00 AM

This is the continuation of the GStreamer WebKit debugging tricks post series. In the next three posts, I’ll focus on what we can get by making some small changes to the source code for debugging purposes (known as “instrumenting”), but first, you might want to check the previous posts of the series:

// File getenv.c

// To compile: gcc -shared -Wall -fPIC -o getenv.so getenv.c -ldl

// To use: export LD_PRELOAD="./getenv.so", then run any program you want

// See http://www.catonmat.net/blog/simple-ld-preload-tutorial-part-2/

#define _GNU_SOURCE

#include <stdio.h>

#include <dlfcn.h>

// This function will take the place of the original getenv() in libc

char *getenv(const char *name) {

printf("Calling getenv(\"%s\")\n", name);

char *(*original_getenv)(const char*);

original_getenv = dlsym(RTLD_NEXT, "getenv");

return (*original_getenv)(name);

}

See the breakpoints with command example to know how to get the same using gdb. Check also Zan’s libpine for more features.

The gobject-list project, written by Thibault Saunier, is a simple LD_PRELOAD library for tracking the lifetime of GObjects. When loaded into an application, it prints a list of living GObjects on exiting the application (unless the application crashes), and also prints reference count data when it changes. SIGUSR1 or SIGUSR2 can be sent to the application to trigger printing of more information.

The usual debugging macros aren’t printing messages? Redefine them to do what you want:

#undef LOG_MEDIA_MESSAGE

#define LOG_MEDIA_MESSAGE(...) do { \

printf("LOG %s: ", __PRETTY_FUNCTION__); \

printf(__VA_ARGS__); \

printf("\n"); \

fflush(stdout); \

} while(0)

This can be done to enable asserts on demand in WebKit too:

#undef ASSERT

#define ASSERT(assertion) \

(!(assertion) ? \

(WTFReportAssertionFailure(__FILE__, __LINE__, WTF_PRETTY_FUNCTION, #assertion), \

CRASH()) : \

(void)0)

#undef ASSERT_NOT_REACHED

#define ASSERT_NOT_REACHED() do { \

WTFReportAssertionFailure(__FILE__, __LINE__, WTF_PRETTY_FUNCTION, 0); \

CRASH(); \

} while (0)

It may be interesting to enable WebKit LOG() and GStreamer GST_DEBUG() macros only on selected files:

#define LOG(channel, msg, ...) do { \

printf("%s: ", #channel); \

printf(msg, ## __VA_ARGS__); \

printf("\n"); \

fflush(stdout); \

} while (false)

#define _GST_DEBUG(msg, ...) do { \

printf("### %s: ", __PRETTY_FUNCTION__); \

printf(msg, ## __VA_ARGS__); \

printf("\n"); \

fflush(stdout); \

} while (false)

Note all the preprocessor trickery used here:

__VA_ARGS__
do while(false) is a trick to avoid {braces} and make the code block work when used in if/then/else one-liners
#channel expands LOG(MyChannel,....) as printf("%s: ", "MyChannel"). It’s called “stringification”.
## __VA_ARGS__ expands the variable argument list as a comma-separated list of items, but if the list is empty, it eats the comma after “msg”, preventing syntax errors

Use typeid(<expression>).name(). Filter the output through c++filt -t:

std::vector<char *> v;

printf("Type: %s\n", typeid(v.begin()).name());

If you want to know all the places from where the GstClockTime toGstClockTime(float time) function is called, you can convert it to a template function and use static_assert on a wrong datatype like this (in the .h):

template <typename T = float> GstClockTime toGstClockTime(float time) {

static_assert(std::is_integral<T>::value,

"Don't call toGstClockTime(float)!");

return 0;

}

Note that the default T = float is not an integral type, so std::is_integral fails and the assertion fires. It has nothing to do with the float time parameter declaration.

You will get compile-time errors like this in every place the function is used:

WebKitMediaSourceGStreamer.cpp:474:87: required from here
GStreamerUtilities.h:84:43: error: static assertion failed: Don't call toGstClockTime(float)!

Sometimes it’s useful to know if a particular define is enabled:

#include <limits.h>

#define _STR(x) #x
#define STR(x) _STR(x)

#pragma message "Int max is " STR(INT_MAX)

#ifdef WHATEVER
#pragma message "Compilation goes by here"
#else
#pragma message "Compilation goes by there"
#endif
...

The code above would generate this output:

test.c:6:9: note: #pragma message: Int max is 0x7fffffff

#pragma message "Int max is " STR(INT_MAX)

^~~~~~~

test.c:11:9: note: #pragma message: Compilation goes by there

#pragma message "Compilation goes by there"

^~~~~~~

by eocanha on April 27, 2021 06:00 AM

This post is a continuation of a series of blog posts about the most interesting debugging tricks I’ve found while working on GStreamer WebKit on embedded devices. These are the other posts of the series published so far:

In some circumstances you may get stacktraces that eventually stop because of missing symbols or corruption (?? entries).

#3 0x01b8733c in ?? () Backtrace stopped: previous frame identical to this frame (corrupt stack?)

However, you can print the stack in a useful way that gives you leads about what was next in the stack:

x/256wa $esp (32-bit x86)
x/256ga $rsp (x86-64)
x/256wa $sp (gdb’s generic stack pointer name, e.g. on ARM)

You may want to enable asm-demangle: set print asm-demangle

Example output, the 3 last lines give interesting info:

0x7ef85550: 0x1b87400 0x2 0x0 0x1b87400
0x7ef85560: 0x0 0x1b87140 0x1b87140 0x759e88a4
0x7ef85570: 0x1b87330 0x759c71a9 <gst_base_sink_change_state+956> 0x140c 0x1b87330
0x7ef85580: 0x759e88a4 0x7ef855b4 0x0 0x7ef855b4
...
0x7ef85830: 0x76dbd6c4 <WebCore::AppendPipeline::resetPipeline()::__PRETTY_FUNCTION__> 0x4 0x3 0x1bfeb50
0x7ef85840: 0x0 0x76d59268 0x75135374 0x75135374
0x7ef85850: 0x76dbd6c4 <WebCore::AppendPipeline::resetPipeline()::__PRETTY_FUNCTION__> 0x1b7e300 0x1d651d0 0x75151b74

More info: 1

Sometimes the symbol names aren’t printed in the stack memdump. You can do this trick to iterate the stack and print the symbols found there (take with a grain of salt!):

(gdb) set $i = 0
(gdb) p/a *((void**)($sp + 4*$i++))
[Press ENTER multiple times to repeat the command]
$46 = 0xb6f9fb17 <_dl_lookup_symbol_x+250>
$58 = 0xb40a9001 <g_log_writer_standard_streams+128>
$142 = 0xb40a877b <g_return_if_fail_warning+22>
$154 = 0xb65a93d5 <WebCore::MediaPlayerPrivateGStreamer::changePipelineState(GstState)+180>
$164 = 0xb65ab4e5 <WebCore::MediaPlayerPrivateGStreamer::playbackPosition() const+420>
...

Many times it’s just a matter of gdb not having loaded the unstripped version of the library. /proc/<PID>/smaps and info proc mappings can help to locate the library providing the missing symbol. Then we can load it by hand.

For instance, for this backtrace:

#0 0x740ad3fc in syscall () from /home/enrique/buildroot-wpe/output/staging/lib/libc.so.6
#1 0x74375c44 in g_cond_wait () from /home/enrique/buildroot-wpe/output/staging/usr/lib/libglib-2.0.so.0
#2 0x6cfd0d60 in ?? ()

In a shell, we examine smaps and find out that the unknown piece of code comes from libgstomx:

$ cat /proc/715/smaps
...
6cfc1000-6cff8000 r-xp 00000000 b3:02 785380 /usr/lib/gstreamer-1.0/libgstomx.so
...

Now we load the unstripped .so in gdb and we’re able to see the new symbol afterwards:

(gdb) add-symbol-file /home/enrique/buildroot-wpe/output/build/gst-omx-custom/omx/.libs/libgstomx.so 0x6cfc1000
(gdb) bt
#0 0x740ad3fc in syscall () from /home/enrique/buildroot-wpe/output/staging/lib/libc.so.6
#1 0x74375c44 in g_cond_wait () from /home/enrique/buildroot-wpe/output/staging/usr/lib/libglib-2.0.so.0
#2 0x6cfd0d60 in gst_omx_video_dec_loop (self=0x6e0c8130) at gstomxvideodec.c:1311
#3 0x6e0c8130 in ?? ()

Useful script to prepare the add-symbol-file:

cat /proc/715/smaps | grep '[.]so' | sed -e 's/-[0-9a-f]*//' | { while read ADDR _ _ _ _ LIB; do echo "add-symbol-file $LIB 0x$ADDR"; done; }

More info: 1

The “figuring out corrupt ARM stacktraces” post has some additional info about how to use addr2line to translate memory addresses to function names on systems with a hostile debugging environment.

There are times when there’s just no way to get debug symbols working, or where we’re simply debugging on a release version of the software. In those cases, we must directly debug the assembly code. The gdb text user interface (TUI) can be used to examine the disassembled code and the CPU registers. It can be enabled with these commands:

layout asm
layout regs
set print asm-demangle

Some useful keybindings in this mode:

This screenshot shows how we can infer that an empty RefPtr is causing a crash in some WebKit code.

Sometimes, when you continue (‘c’) execution on ARM there’s no way to stop it again unless a breakpoint is hit. But there’s a trick to regain control: just send a harmless signal to the process.

kill -SIGCONT 1234

Sometimes you need to match threads in the GStreamer logs with threads in a running gdb session. The simplest way is to ask GLib for the GThread of each gdb thread:

(gdb) set output-radix 16
(gdb) thread apply all call g_thread_self()

This will print a list of gdb threads and GThread*. We only need to find the one we’re looking for.

If we have a pointer to the pipeline object, we can call the function that dumps the pipeline:

(gdb) call gst_debug_bin_to_dot_file_with_ts((GstBin*)0x15f0078, GST_DEBUG_GRAPH_SHOW_ALL, "debug")

by eocanha on April 20, 2021 06:00 AM

I’ve been developing and debugging desktop and mobile applications on embedded devices over the last decade or so. For most of this period I’ve focused on the multimedia side of the WebKit ports using GStreamer, an area that is a mix of C (glib, GObject and GStreamer) and C++ (WebKit).

Over these years I’ve had to work on ARM embedded devices (mobile phones, set-top-boxes, Raspberry Pi using buildroot) where most of the environment aids and tools we take for granted on a regular x86 Linux desktop just aren’t available. In these situations you have to be imaginative and find your own way to get the work done and debug the issues you find along the way.

I’ve been writing down the most interesting tricks I’ve found on this journey and I’m sharing them with you in a series of 7 blog posts, one per week. Most of them aren’t mine, and the ones I learnt at the beginning of my career can even seem a bit naive, but I find them worth sharing anyway. I hope you find them as useful as I do.

You can break at a location, run some commands and continue execution. This is useful to get logs:

    break getenv
    command
     # This disables scroll continue messages
     # and suppresses output
     silent
     set pagination off
     p (char*)$r0
     continue
    end

    break grl-xml-factory.c:2720 if (data != 0)
    command
     call grl_source_get_id(data->source)
     # $ is the last value in the history, the result of
     # the previous call
     call grl_media_set_source (send_item->media, $)
     call grl_media_serialize_extended (send_item->media, GRL_MEDIA_SERIALIZE_FULL)
     continue
    end

This idea can be combined with watchpoints and applied to trace reference counting in GObjects and know from which places the refcount is increased and decreased.

Just wait until the if chooses a branch and then jump to the other one:

6 if (i > 3) {

(gdb) next

7 printf("%d > 3\n", i);

(gdb) break 9

(gdb) jump 9

9 printf("%d <= 3\n", i);

(gdb) next

5 <= 3

If you get a warning message like this:

W/GLib-GObject(18414): g_object_unref: assertion `G_IS_OBJECT (object)' failed

the functions involved are g_return_if_fail_warning(), which in turn calls g_log(). It’s useful to set a breakpoint on either of them:

break g_log

Another method is to export G_DEBUG=fatal_criticals, which turns all criticals into crashes, so execution stops in the debugger.

If you want to inspect the contents of a GObject that you have a reference to…

(gdb) print web_settings

$1 = (WebKitWebSettings *) 0x7fffffffd020

you can dereference it…

(gdb) print *web_settings

$2 = {parent_instance = {g_type_instance = {g_class = 0x18}, ref_count = 0, qdata = 0x0}, priv = 0x0}

even if it’s an untyped gpointer…

(gdb) print user_data

(void *) 0x7fffffffd020

(gdb) print *((WebKitWebSettings *)(user_data))

{parent_instance = {g_type_instance = {g_class = 0x18}, ref_count = 0, qdata = 0x0}, priv = 0x0}

To find the type, you can use GType:

(gdb) call (char*)g_type_name( ((GTypeInstance*)0x70d1b038)->g_class->g_type )

$86 = 0x2d7e14 "GstOMXH264Dec-omxh264dec"

(gdb) call malloc(sizeof(std::string))

$1 = (void *) 0x91a6a0

(gdb) call ((std::string*)0x91a6a0)->basic_string()

(gdb) call ((std::string*)0x91a6a0)->assign("Hello, World")

$2 = (std::basic_string<char, std::char_traits<char>, std::allocator<char> > &) @0x91a6a0: {static npos = <optimized out>, _M_dataplus = {<std::allocator<char>> = {<__gnu_cxx::new_allocator<char>> = {<No data fields>}, <No data fields>}, _M_p = 0x91a6f8 "Hello, World"}}

(gdb) call SomeFunctionThatTakesAConstStringRef(*(const std::string*)0x91a6a0)

by eocanha on April 13, 2021 10:49 AM

Ask a modern programmer to design a program for you, and you will soon be handed a diagram with classes called Cliente and Empleado, and Usuario and UsuarioDAO, and ConnectionProvider and ConnectionProviderFactory and AbstractConnectionProviderFactoryImpl.

Well, maybe only Java programmers get into that kind of mess, but it is clear that nowadays every programmer knows and uses the basic concepts of object orientation. Even in languages that are not object-oriented, programmers create and use structs, or records, or Types, or whatever mechanism the language offers for creating new data types.

And yet, however wise we programmers consider ourselves today, our programs still carry vestiges of the old days when Fortran and COBOL and BASIC gave us nothing but primitive data types. Every time we want to store the primary key of a database record, we put it in a variable of type long or string. If we want to pass a timestamp to a function, we use an argument of type int or long. A function that returns a UUID? Type string. A text string received from the user? A text string ready to be inserted into an HTML document? Both of type string.

We use the same data types, over and over, for things as different as primary keys of different tables, timestamps in seconds and in milliseconds, or URIs and UUIDs. This is an extremely common practice that is also an extremely common cause of bugs. Who has never written code that looks up a record in the wrong table, or compares seconds with microseconds, or inserts an unescaped text string into an HTML document?

In this article I describe three strategies for dealing with these objects, avoiding confusions like the ones above, and preventing programming errors.

Do you know the Internet Archive? It is a non-profit organization that wants to build a “library of the Internet” by archiving all the web pages in the world, to preserve them and so that we can look at them in the future.

You can imagine that the Internet Archive’s source code works a lot with URIs. Every web page has its own URI and contains the URIs of all the web pages it links to. In the archive, web pages are indexed by their URIs so that users can reach them. In short, for the Internet Archive, URIs are their daily bread.

We programmers are always tempted to store URIs in variables of type string. We see an alphanumeric string and tell ourselves: “that’s easy: it’s a text string, so type string”. However, URIs are not just text strings. URIs have rules they must obey at all times: they have to follow a certain format, certain characters are not valid, there are escape sequences, and so on.

When we store a URI in a variable of type string, the system knows nothing about those rules. The variable could contain a badly formatted URI, or even something that is not a URI at all, and there would be no error message or anything of the sort until we tried to use it and something broke. Therefore we need to write (and always remember to use) code that verifies and normalizes the value every time we assign a new one to the variable. If we don’t, we expose ourselves to a multitude of possible bugs. Maybe we won’t be able to find the web page the user is looking for, maybe we will have an XSS in our page, or some other problem in between.

To solve the problem more effectively we have to recognize that a URI is not a text string that we can manipulate using only variables of type string; instead, we have to use a specific class that encodes all the rules and the format of a URI. This URI class must have functions to convert a text string into an object of type URI, applying all the validation and normalization rules, and it also needs functions to extract the different parts of the URI and to obtain a representation of the URI as a text string. Most modern languages include a URI class in their standard library, so we only need to remember to use it instead of the string type wherever we deal with URIs.

The Internet Archive has, on its home page, a text box where a user can type a URI and see what was on that page at some point in the past. The web server receives the web request containing that URI as a character string; the first thing the server does is convert it into an object of type URI. From that moment on, the URI is validated and formatted, so the server can pass it to the storage service, which will be able to retrieve the content of the web page.
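As a minimal sketch of that idea in Python (the `Uri` class, its `parse` method and the rules it enforces are assumptions made up for this illustration, not the Internet Archive’s code), a dedicated type can validate and normalize once, at the boundary, with the help of the standard `urllib.parse` module:

```python
from dataclasses import dataclass
from urllib.parse import urlsplit, urlunsplit


@dataclass(frozen=True)
class Uri:
    """A validated, normalized web URI (hypothetical helper for illustration)."""
    scheme: str
    host: str
    path: str
    query: str

    @staticmethod
    def parse(text: str) -> "Uri":
        parts = urlsplit(text.strip())
        # Illustrative rules: only absolute http(s) URIs with a host are accepted.
        # Ports, credentials and fragments are ignored in this sketch.
        if parts.scheme not in ("http", "https") or not parts.hostname:
            raise ValueError(f"not a valid web URI: {text!r}")
        # urlsplit lowercases the scheme, and .hostname is lowercased too.
        return Uri(parts.scheme, parts.hostname, parts.path or "/", parts.query)

    def __str__(self) -> str:
        return urlunsplit((self.scheme, self.host, self.path, self.query, ""))


uri = Uri.parse("HTTP://Example.ORG/some/page?id=1")
print(uri)       # the normalized text form
print(uri.host)  # one of the extracted parts
```

Any function that takes a `Uri` instead of a string can then rely on the value having gone through `parse` already.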

Sometimes we cannot use classes provided by our standard library and have to write them ourselves. For example, years ago I worked on a project with a database that stored objects indexed by an identifier in the format HHHHHHHH-V, where H was a hexadecimal digit and V was a version number of one or more digits.

The original programmers of the system had not agreed on how to handle these identifiers. In some parts of the program, the identifier was stored in a variable of type string. In other parts, the identifier was split into two variables: one of type string holding the hexadecimal digits and another of type int holding the version number. Our code often looked like this:

    public List<String> buscaIdsAsociados(String id)
            throws IllegalArgumentException, NotFoundException {
        if (!esValido(id)) {
            throw new IllegalArgumentException();
        }
        String hexa = extraeHexa(id);
        int version = extraeVersion(id);
        Registro registro = buscaRegistro(hexa, version);
        List<String> idsAsociados = new ArrayList<>();
        for (Registro asociado : registro.getRegistrosAsociados()) {
            idsAsociados.add(asociado.getHexa() + "-" + asociado.getVersion());
        }
        return idsAsociados;
    }

    public Registro buscaRegistro(String hexa, int version) {
        Query query = createQuery(
            "SELECT * FROM Registros WHERE hexa=? AND version=?", hexa, version);
        return convertir(query.execute());
    }

As you can see, it was a mess. We spent our time validating identifiers, splitting them into two parts, reassembling them, and hunting bugs caused by places where we had forgotten to validate or where we had passed a full identifier when we needed the hexadecimal part, or vice versa.

The solution to this problem was to recognize that an identifier is a new kind of entity and not just a text string, and to create a class dedicated to storing and manipulating identifiers.

    public class Identificador {
        private String hexa;
        private int version;

        private Identificador(String hexa, int version) {
            this.hexa = hexa;
            this.version = version;
        }

        public String getHexa() { return hexa; }
        public int getVersion() { return version; }
        public String toString() { return hexa + "-" + version; }

        public static Identificador crear(String id) throws IllegalArgumentException {
            // Validate the identifier and extract its parts
            ...
            String hexa = ...;
            int version = ...;
            return new Identificador(hexa, version);
        }
    }

With this we were able to simplify our code, avoiding the constant validations and treating identifiers the same way throughout the program, which eliminated hundreds of lines of code and as many opportunities to introduce bugs.

    public List<Identificador> buscaIdsAsociados(Identificador id) throws NotFoundException {
        Registro registro = buscaRegistro(id);
        List<Identificador> idsAsociados = new ArrayList<>();
        for (Registro asociado : registro.getRegistrosAsociados()) {
            idsAsociados.add(asociado.getIdentificador());
        }
        return idsAsociados;
    }

    public Registro buscaRegistro(Identificador id) {
        Query query = createQuery(
            "SELECT * FROM Registros WHERE hexa=? AND version=?",
            id.getHexa(), id.getVersion());
        return convertir(query.execute());
    }
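The validation step that the Java listing leaves out could look roughly like this in Python. This is only a sketch of the HHHHHHHH-V format described in the text; the regular expression, the lowercase normalization and the error message are my assumptions, not the original project’s code:

```python
import re
from dataclasses import dataclass

# Format described in the text: eight hexadecimal digits, a dash,
# and a version number of one or more digits.
_ID_RE = re.compile(r"([0-9A-Fa-f]{8})-([0-9]+)")


@dataclass(frozen=True)
class Identificador:
    hexa: str
    version: int

    @staticmethod
    def crear(texto: str) -> "Identificador":
        m = _ID_RE.fullmatch(texto)
        if m is None:
            raise ValueError(f"invalid identifier: {texto!r}")
        # Assumption: hex digits are case-insensitive, so normalize to lowercase.
        return Identificador(m.group(1).lower(), int(m.group(2)))

    def __str__(self) -> str:
        return f"{self.hexa}-{self.version}"


ident = Identificador.crear("00C0FFEE-12")
print(ident.hexa, ident.version, ident)
```

Because `crear` is the only place where text turns into an `Identificador`, the rest of the program never needs to validate again.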

In the database world there are two schools: those who use INTs for primary keys and those who use UUIDs. Whichever school your DBA belongs to, your code will end up full of variables, all of the same type, holding primary keys that belong to different tables.

    long idArticulo = getLongParam("idArticulo");
    long idComentario = getLongParam("idComentario");
    Comentario comentario = cargaComentario(idArticulo, idComentario);
    long idUsuario = comentario.idAutor();
    Usuario usuario = cargaUsuario(idArticulo);
    outputTemplate("comentario", usuario, comentario);

This could be part of the source code of a CMS: a function that handles the response to an HTTP GET operation to show a comment on an article. The function receives an article identifier and a comment identifier, loads the comment and the data of the user who posted it, and converts them to HTML to show them in the browser.

The database uses integers for primary keys, and the language stores them in variables of type long. We have one key for the article, another for the comment and another for the user. And since all these keys live in variables of the same type, it is very easy to get confused and mix them up without noticing. In fact, the code above contains a bug. How long does it take you to find it?

Even though all the primary keys are stored in variables of the same type, they are not interchangeable. If we pass a user identifier to a function that expects an article identifier, that function will give us an incorrect result. The bad part is that we will not notice the mix-up until we test the program and see the error; or, worse, until a user sees it and tells us. Or, worst of all, we will never notice and will end up corrupting data.

If these keys are not interchangeable in practice, they should not be interchangeable in the code either. We can achieve this very easily by using a different class for the primary keys of each table.

    IdArticulo idArticulo = new IdArticulo(getLongParam("idArticulo"));
    IdComentario idComentario = new IdComentario(getLongParam("idComentario"));
    Comentario comentario = cargaComentario(idArticulo, idComentario);
    IdUsuario idUsuario = comentario.idAutor();
    Usuario usuario = cargaUsuario(idArticulo); // The compiler gives us an error here.
    outputTemplate("comentario", usuario, comentario);

Creating these classes is very easy in most modern languages. For example, in Java we can create a base class that holds all the functionality, and then add one line of code for each type.

    public abstract class Id {
        private long id;
        public Id(long id) { this.id = id; }
        public long getId() { return id; }
        public String toString() { return getClass().getSimpleName() + "=" + id; }
    }

    public class IdArticulo extends Id { public IdArticulo(long id) { super(id); } }
    public class IdComentario extends Id { public IdComentario(long id) { super(id); } }
    public class IdUsuario extends Id { public IdUsuario(long id) { super(id); } }
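The same pattern can be sketched in Python, where the check happens in a static type checker (or at run time) rather than in a compiler. The `carga_usuario` function below is a hypothetical stand-in for the loader used in the example above:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Id:
    valor: int


class IdArticulo(Id):
    pass


class IdUsuario(Id):
    pass


def carga_usuario(id_usuario: IdUsuario) -> str:
    # Hypothetical loader. A static checker such as mypy rejects other Id
    # types; the isinstance check catches the same mistake at run time.
    if not isinstance(id_usuario, IdUsuario):
        raise TypeError(f"expected IdUsuario, got {type(id_usuario).__name__}")
    return f"usuario #{id_usuario.valor}"


print(carga_usuario(IdUsuario(7)))
# Same number, different kinds of key: the two values do not compare equal.
print(IdArticulo(7) == IdUsuario(7))
```

Passing `IdArticulo(7)` to `carga_usuario` raises a `TypeError` instead of silently loading the wrong row.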

This technique will also be useful in data-processing tasks, where we may be handling data that belongs to different processing stages at the same time and do not want to mix them. Nowadays you will most often come across it as a way to avoid XSS in web applications.

Many web applications need to receive a text string from the user, process it in some way, and finally show it on a web page. If you are not careful and paste that text directly into the HTML, you expose yourself to an XSS attack; at the very least, you have to escape that string before putting it into the HTML. Sometimes web application programmers get confused and escape a string twice, and then users see odd things like “t&eacute;cnica” instead of “técnica”. Or they get the string escaping for SQL wrong and users see “O\'Connell” instead of “O'Connell”.

To avoid these problems, many modern web frameworks do not let us paste strings directly; instead they force us to use special types that hold text strings that are already escaped. We can create an instance of one of these types from an unescaped string, and from then on there is no room for confusion: wherever we use that class, it is a string that is already escaped and ready to be inserted into HTML code.
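A toy version of such a type might look like this in Python. The names `SafeHtml`, `from_text` and `paragraph` are invented for this sketch and do not belong to any particular framework; only `html.escape` comes from the standard library:

```python
import html


class SafeHtml:
    """Text that is already escaped for HTML (illustrative, not a framework API)."""

    def __init__(self, escaped: str):
        self._escaped = escaped

    @classmethod
    def from_text(cls, text) -> "SafeHtml":
        # Escaping happens exactly once: already-safe values pass through untouched.
        if isinstance(text, SafeHtml):
            return text
        return cls(html.escape(text))

    def __str__(self) -> str:
        return self._escaped


def paragraph(content: SafeHtml) -> str:
    # The template helper only accepts the safe type, never a raw string.
    if not isinstance(content, SafeHtml):
        raise TypeError("escape the text with SafeHtml.from_text() first")
    return f"<p>{content}</p>"


comment = SafeHtml.from_text("a < b & c")
print(paragraph(comment))
print(paragraph(SafeHtml.from_text(comment)))  # no double escaping
```

Since the type records that escaping has already happened, both the missing-escape and the double-escape mistakes become hard to make by accident.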

It is very common to use ints and longs to represent time intervals. The question is: in which unit? The Unix operating system uses seconds, but the Java and JavaScript languages use milliseconds. I have worked with systems that used microseconds and nanoseconds. Often it is necessary to use different units in different places of the same program, depending on who wrote the code or which function receives a given number.

The problem is that all those time intervals are represented as a plain long, with the units not marked in any way, so it is very easy to pass a number of milliseconds to a function that expects seconds, or to subtract microseconds from nanoseconds, or to perform other meaningless operations without meaning to.

We might be tempted to solve this problem by creating a new type for each unit. A Segundos class would store an interval measured in seconds, a Milisegundos class would store an interval measured in milliseconds, and so on. That way we could no longer mix different units without the compiler complaining.

The problem is that we often need to convert between units; sometimes a function returns an interval measured in seconds that we have to pass to another function that expects milliseconds, so we need a conversion. Other times we have to combine two intervals that may be measured in different units. We could solve this by adding conversion, addition and subtraction functions for each pair of units, but with four units that ends up being forty-four functions in total (three conversions, four additions and four subtractions in each of the four classes). That is a lot of functions.

    public class Segundos {
        public Milisegundos milisegundos() { return ... }
        public Microsegundos microsegundos() { return ... }
        public Nanosegundos nanosegundos() { return ... }

        public Segundos suma(Segundos otro) { return ... }
        public Segundos suma(Milisegundos otro) { return ... }
        public Segundos suma(Microsegundos otro) { return ... }
        public Segundos suma(Nanosegundos otro) { return ... }

        public Segundos resta(Segundos otro) { return ... }
        public Segundos resta(Milisegundos otro) { return ... }
        public Segundos resta(Microsegundos otro) { return ... }
        public Segundos resta(Nanosegundos otro) { return ... }
    }
    // And the same three more times for Milisegundos, Microsegundos and Nanosegundos

Frankly, this option is not sustainable. As soon as we need to add one or two units or operations, we will have to write a colossal amount of code and, to add insult to injury, most of it will be conversion operations.

The real solution is to realize that the entity we are creating data types for is not the number of seconds, milliseconds or microseconds. The entity is the time interval, and all those units are just ways of measuring it. We do not need a type for each unit of time; we need a type for time intervals, with the operations necessary to express those intervals in the appropriate units.

For example, we could create an Intervalo type that holds a variable expressing the length of the interval in a convenient unit, together with functions to express that interval in seconds, milliseconds, microseconds and nanoseconds, and functions for the inverse conversion.

    public class Intervalo {
        private double valor;

        private Intervalo(double valor) { this.valor = valor; }

        // Constructors
        public static Intervalo deSegundos(long segundos) { return new Intervalo(segundos); }
        public static Intervalo deMilisegundos(long milisegundos) { return new Intervalo(milisegundos / 1e3); }
        public static Intervalo deMicrosegundos(long microsegundos) { return new Intervalo(microsegundos / 1e6); }
        public static Intervalo deNanosegundos(long nanosegundos) { return new Intervalo(nanosegundos / 1e9); }

        // Conversions
        public long segundos() { return (long) valor; }
        public long milisegundos() { return (long) (valor * 1e3); }
        public long microsegundos() { return (long) (valor * 1e6); }
        public long nanosegundos() { return (long) (valor * 1e9); }

        // Combinations
        public Intervalo suma(Intervalo otro) { return new Intervalo(valor + otro.valor); }
        public Intervalo resta(Intervalo otro) { return new Intervalo(valor - otro.valor); }
    }

And this is all we need to represent time intervals measured in several units; each time we add a new unit, we only need to add two functions.

Now we can use this class to replace all those longs expressed in unspecified units throughout our code, and so avoid all the confusions and opportunities for error that I mentioned above.

    // Old-fashioned.
    // Which unit is the timeout in? Seconds? Milliseconds? Weeks?
    Registro buscaRegistro(Identificador id, long timeout) { ... }

    // The latest fashion.
    Registro buscaRegistro(Identificador id, Intervalo timeout) { ... }

Many modern programming languages include a class similar to Intervalo in their standard library. For example, Java has the java.time package, which provides the Duration class (along with another class, Instant, that represents a particular point in time). C++, for its part, provides the std::chrono namespace, with its duration and time_point classes. In other languages there are third-party libraries that provide it. Use them whenever you can; never use longs to represent time.
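Python is one more example: its standard library offers `datetime.timedelta`, which plays the same role. A short illustration (the variable name `timeout` is just for the example):

```python
from datetime import timedelta

# Intervals built from different units combine without manual conversions.
timeout = timedelta(seconds=2) + timedelta(milliseconds=1500)

print(timeout.total_seconds())                # 3.5
print(timeout // timedelta(milliseconds=1))   # 3500
print(timedelta(seconds=60) == timedelta(minutes=1))  # True
```

The unit only shows up where a value enters or leaves the type, which is exactly where a reader needs to see it.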

Sometimes using a primitive type is the simplest option, but it can end up giving us a lot of headaches. We have to think about whether the number we store in that long is really just a number, or whether what is in that string is nothing more than a text string, and create and use new data types whenever the values carry some kind of complication, restriction or invariant.

We humans know that we cannot add two cherries to three oranges, but computers, if all they see is two longs or two doubles, will add and divide them without thinking twice. We humans, who know that distinct entities belong to distinct types, have to communicate this distinction to the computer in the form of new data types.

And finally, we know that 60 seconds is the same as one minute, or that 5000 meters equal 5 kilometers; however, the computer only sees one variable that says “60” and another that says “1”, or one value “5000” and another “5”. It is up to us to tell the computer that both are the same thing.

The next time you find yourself thinking it would be nice to “annotate” or “tag” a number or a text string so that it gets special treatment, try creating and using a new data type. I am sure that doing so will make your programs much more reliable and easier to read and modify.

on January 17, 2021 12:00 AM

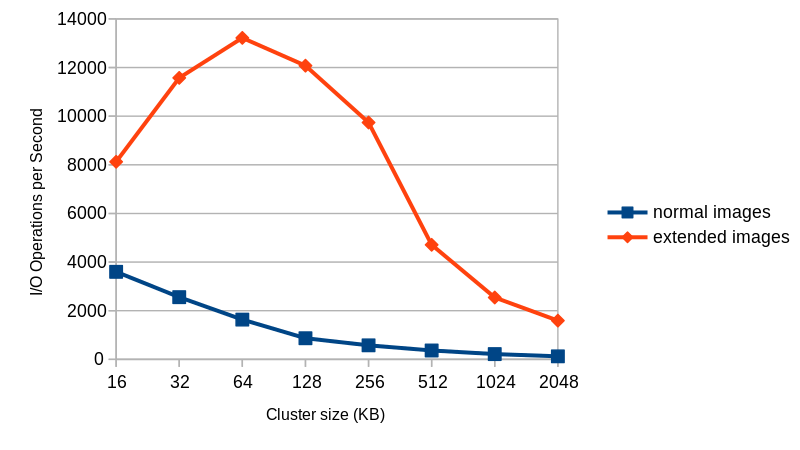

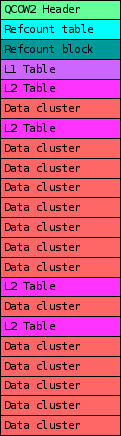

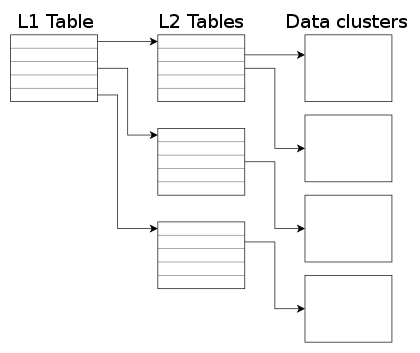

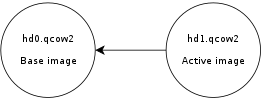

In previous blog posts I talked about QEMU’s qcow2 file format and how to make it faster. This post gives an overview of how the data is structured inside the image and how that affects performance, and this presentation at KVM Forum 2017 goes further into the topic.

This time I will talk about a new extension to the qcow2 format that seeks to improve its performance and reduce its memory requirements.

Let’s start by describing the problem.

One of the most important parameters when creating a new qcow2 image is the cluster size. Much like a filesystem’s block size, the qcow2 cluster size indicates the minimum unit of allocation. One difference, however, is that while filesystems tend to use small blocks (4 KB is a common size in ext4, ntfs or hfs+), the standard qcow2 cluster size is 64 KB. This adds some overhead because QEMU always needs to write complete clusters, so it often ends up doing copy-on-write and writing more data to the qcow2 image than the virtual machine requested. This gets worse if the image has a backing file, because then QEMU needs to copy data from there, so a write request not only becomes larger but also involves additional read requests from the backing file(s).

Because of that qcow2 images with larger cluster sizes tend to:

Unfortunately, reducing the cluster size is in general not an option because it also has an impact on the amount of metadata used internally by qcow2 (reference counts, guest-to-host cluster mapping). Decreasing the cluster size increases the number of clusters and the amount of necessary metadata. This has a direct negative impact on I/O performance, which can be mitigated by caching the metadata in RAM, therefore increasing the memory requirements (the aforementioned post covers this in more detail).
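To get a feel for the numbers, here is a rough Python sketch of how the guest-to-host mapping metadata grows as clusters shrink. It assumes 8-byte L2 entries (the size used by standard, non-extended qcow2 images) and ignores reference counts and L1 tables, so treat the results as an approximation; the function name is made up for this example.

```python
L2_ENTRY_BYTES = 8  # assumption: size of a standard (non-extended) L2 entry

KB = 1024
MB = 1024 * KB
TB = 1024 * 1024 * MB


def l2_metadata_bytes(virtual_size: int, cluster_size: int) -> int:
    """Approximate size of the L2 tables needed to map the whole image."""
    clusters = -(-virtual_size // cluster_size)  # ceiling division
    return clusters * L2_ENTRY_BYTES


for cluster_size in (64 * KB, 16 * KB, 4 * KB):
    size = l2_metadata_bytes(1 * TB, cluster_size)
    print(f"{cluster_size // KB:>3} KB clusters -> {size // MB} MB of L2 tables")
```

Every halving of the cluster size doubles the number of clusters, and with it the amount of mapping metadata that has to be read or cached.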

The problems described in the previous section are well-known consequences of the design of the qcow2 format and they have been discussed over the years.

I have been working on a way to improve the situation and the work is now finished and available in QEMU 5.2 as a new extension to the qcow2 format called extended L2 entries.

The so-called L2 tables are used to map guest addresses to data clusters. With extended L2 entries we can store more information about the status of each data cluster, and this allows us to have allocation at the subcluster level.

The basic idea is that data clusters are now divided into 32 subclusters of the same size, and each one of them can be allocated separately. This allows combining the benefits of larger cluster sizes (less metadata and RAM requirements) with the benefits of smaller units of allocation (less copy-on-write, smaller images). If the subcluster size matches the block size of the filesystem used inside the virtual machine then we can eliminate the need for copy-on-write entirely.
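The arithmetic behind that last point is simple enough to write down. A small Python sketch (the helper names are invented for this example; the factor of 32 comes from the text above):

```python
SUBCLUSTERS_PER_CLUSTER = 32  # as stated in the text

KB = 1024


def subcluster_size(cluster_size: int) -> int:
    """Size in bytes of each subcluster for a given cluster size."""
    return cluster_size // SUBCLUSTERS_PER_CLUSTER


def cluster_size_for_block(block_size: int) -> int:
    """Cluster size whose subclusters match the guest filesystem block size."""
    return block_size * SUBCLUSTERS_PER_CLUSTER


for size in (64 * KB, 128 * KB):
    print(f"{size // KB} KB clusters -> {subcluster_size(size) // KB} KB subclusters")

print(cluster_size_for_block(4 * KB) // KB, "KB clusters match 4 KB blocks")
```

So a guest filesystem with 4 KB blocks lines up with subclusters when the image uses 128 KB clusters.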

So with subcluster allocation we get:

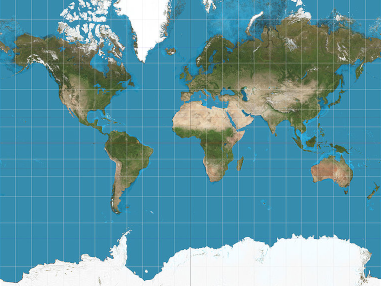

This figure shows the average number of I/O operations per second that I get with 4KB random write requests to an empty 40GB image with a fully populated backing file.


Things to take into account:

Extended L2 entries are available starting from QEMU 5.2. Due to the nature of the changes it is unlikely that this feature will be backported to an earlier version of QEMU.

In order to test this you simply need to create an image with extended_l2=on, and you also probably want to use a larger cluster size (the default is 64 KB, remember that every cluster has 32 subclusters). Here is an example:

$ qemu-img create -f qcow2 -o extended_l2=on,cluster_size=128k img.qcow2 1T

And that’s all you need to do. Once the image is created all allocations will happen at the subcluster level.

This work was presented at the 2020 edition of the KVM Forum. Here is the video recording of the presentation, where I cover all this in more detail:

You can also find the slides here.

This work has been possible thanks to Outscale, who have been sponsoring Igalia and my work in QEMU.


And thanks of course to the rest of the QEMU development team for their feedback and help with this!

by berto on December 03, 2020 06:15 PM

If you’re developing C/C++ on embedded devices, you might already have stumbled upon a corrupt stacktrace like this when trying to debug with gdb:

    (gdb) bt
    #0 0xb38e32c4 in pthread_getname_np () from /home/enrique/buildroot/output5/staging/lib/libpthread.so.0
    #1 0xb38e103c in __lll_timedlock_wait () from /home/enrique/buildroot/output5/staging/lib/libpthread.so.0
    Backtrace stopped: previous frame identical to this frame (corrupt stack?)

In these cases I usually give up on gdb and try to solve my problems by adding printf()s and resorting to other tools. However, there are times when you really, really need to know what is in that cursed stack.

On ARM devices, subroutine calls work by setting the return address in the Link Register (LR), so the subroutine knows where to point the Program Counter (PC) register to when it returns. While LR isn’t being used to jump into a subroutine, its value is saved in the stack (to be restored later, right before the current subroutine returns to the caller) and the register can be used for other tasks (LR is a “scratch register”). This means that the functions in the backtrace are actually there, in the stack, in the form of older saved LRs, waiting for us to get them.

So, the first step would be to dump the memory contents of the backtrace, starting from the address pointed to by the Stack Pointer (SP). Let’s print the first 256 32-bit words and save them as a file from gdb:

    (gdb) set logging overwrite on
    (gdb) set logging file /tmp/bt.txt
    (gdb) set logging on
    Copying output to /tmp/bt.txt.
    (gdb) x/256wa $sp
    0xbe9772b0: 0x821e 0xb38e103d 0x1aef48 0xb1973df0
    0xbe9772c0: 0x73d 0xb38dc51f 0x0 0x1
    0xbe9772d0: 0x191d58 0x191da4 0x19f200 0xb31ae5ed
    ...
    0xbe977560: 0xb28c6000 0xbe9776b4 0x5 0x10871 <main(int, char**)>
    0xbe977570: 0xb6f93000 0xaaaaaaab 0xaf85fd4a 0xa36dbc17
    0xbe977580: 0x130 0x0 0x109b9 <__libc_csu_init> 0x0
    ...
    0xbe977690: 0x0 0x0 0x108cd <_start> 0x0
    0xbe9776a0: 0x0 0x108ed <_start+32> 0x10a19 <__libc_csu_fini> 0xb6f76969
    (gdb) set logging off
    Done logging to /tmp/bt.txt.

gdb can already name some of the functions (like main()), but not all of them, and certainly not the most interesting ones for our purpose. We’ll have to look for them by hand.

We first get the memory page mapping from the process (WebKit’s WebProcess in my case) looking in /proc/pid/maps. I’m retrieving it from the device (named metro) via ssh and saving it to a local file. I’m only interested in the code pages, those with executable (‘x’) permissions:

$ ssh metro 'cat /proc/$(ps axu | grep WebProcess | grep -v grep | { read _ P _ ; echo $P ; })/maps | grep " r.x. "' > /tmp/maps.txt

The file looks like this:

    00010000-00011000 r-xp 00000000 103:04 2617 /usr/bin/WPEWebProcess
    ...
    b54f2000-b6e1e000 r-xp 00000000 103:04 1963 /usr/lib/libWPEWebKit-0.1.so.2.2.1
    b6f6b000-b6f82000 r-xp 00000000 00:02 816 /lib/ld-2.24.so
    be957000-be978000 rwxp 00000000 00:00 0 [stack]
    be979000-be97a000 r-xp 00000000 00:00 0 [sigpage]
    be97b000-be97c000 r-xp 00000000 00:00 0 [vdso]
    ffff0000-ffff1000 r-xp 00000000 00:00 0 [vectors]

Now we process the backtrace to remove address markers and have one word per line:

$ cat /tmp/bt.txt | sed -e 's/^[^:]*://' -e 's/[<][^>]*[>]//g' | while read A B C D; do echo $A; echo $B; echo $C; echo $D; done | sed 's/^0x//' | while read P; do printf '%08x\n' "$((16#"$P"))"; done | sponge /tmp/bt.txt

Then merge and sort both files, so the addresses in the stack appear below their corresponding mappings:

$ cat /tmp/maps.txt /tmp/bt.txt | sort > /tmp/merged.txt

Now we process the resulting file to get each address in the stack with its corresponding mapping:

$ cat /tmp/merged.txt | while read LINE; do if [[ $LINE =~ - ]]; then MAPPING="$LINE"; else echo $LINE '-->' $MAPPING; fi; done | grep '/' | sed -E -e 's/([0-9a-f][0-9a-f]*)-([0-9a-f][0-9a-f]*)/\1 - \2/' > /tmp/mapped.txt

Like this (address in the stack, page start (or base), page end, page permissions, executable file load offset (base offset), etc.):

    0001034c --> 00010000 - 00011000 r-xp 00000000 103:04 2617 /usr/bin/WPEWebProcess
    ...
    b550bfa4 --> b54f2000 - b6e1e000 r-xp 00000000 103:04 1963 /usr/lib/libWPEWebKit-0.1.so.2.2.1
    b5937445 --> b54f2000 - b6e1e000 r-xp 00000000 103:04 1963 /usr/lib/libWPEWebKit-0.1.so.2.2.1
    b5fb0319 --> b54f2000 - b6e1e000 r-xp 00000000 103:04 1963 /usr/lib/libWPEWebKit-0.1.so.2.2.1
    ...
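For readers who find the sort-and-merge shell trick hard to follow, the same address-to-mapping lookup can be sketched in Python with a binary search. This is an alternative illustration, not the script I actually used; the function names are invented, and unlike the shell version it also checks the end of each mapping:

```python
import bisect


def parse_maps(lines):
    """Return sorted (start, end, offset, path) tuples from /proc/<pid>/maps lines."""
    mappings = []
    for line in lines:
        fields = line.split()
        if len(fields) < 6:
            continue  # anonymous mapping without a path
        start, end = (int(x, 16) for x in fields[0].split("-"))
        mappings.append((start, end, int(fields[2], 16), fields[5]))
    return sorted(mappings)


def find_mapping(mappings, address):
    """Return the mapping that contains address, or None."""
    starts = [m[0] for m in mappings]
    # Last mapping whose start is <= address.
    i = bisect.bisect_right(starts, address) - 1
    if i >= 0 and address < mappings[i][1]:
        return mappings[i]
    return None


maps = parse_maps([
    "00010000-00011000 r-xp 00000000 103:04 2617 /usr/bin/WPEWebProcess",
    "b54f2000-b6e1e000 r-xp 00000000 103:04 1963 /usr/lib/libWPEWebKit-0.1.so.2.2.1",
])
print(find_mapping(maps, 0xB5937445))
print(find_mapping(maps, 0xBE9772B0))  # a stack address: no code mapping
```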

The addr2line tool can give us the exact function an address belongs to, or even the function and source code line if the code has been built with symbols. But the addresses addr2line understands are internal offsets, not absolute memory addresses. We can convert the addresses in the stack to offsets with this expression:

offset = address - page start + base offset
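As a quick worked example in Python, using the values from one of the libWPEWebKit lines in the sample output (the function name is only for illustration):

```python
def file_offset(address: int, page_start: int, base_offset: int) -> int:
    """Convert an absolute address into the offset that addr2line expects."""
    return address - page_start + base_offset


# b5fb0319 --> b54f2000 - b6e1e000 r-xp 00000000 ...
offset = file_offset(0xB5FB0319, 0xB54F2000, 0x00000000)
print(f"{offset:08x}")  # 00abe319
```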

I’m using buildroot as my cross-build environment, so I need to pick the library files from the staging directory because those are the unstripped versions. The addr2line tool is the one from the buildroot cross-compiling toolchain. Written as a script:

$ cat /tmp/mapped.txt | while read ADDR _ BASE _ END _ BASEOFFSET _ _ FILE; do OFFSET=$(printf "%08x\n" $((0x$ADDR - 0x$BASE + 0x$BASEOFFSET))); FILE=~/buildroot/output/staging/$FILE; if [[ -f $FILE ]]; then LINE=$(~/buildroot/output/host/usr/bin/arm-buildroot-linux-gnueabihf-addr2line -p -f -C -e $FILE $OFFSET); echo "$ADDR $LINE"; fi; done > /tmp/addr2line.txt

Finally, we filter out the useless [??] entries:

$ cat /tmp/bt.txt | while read DATA; do cat /tmp/addr2line.txt | grep "$DATA"; done | grep -v '[?][?]' > /tmp/fullbt.txt

What remains is something very similar to what the real backtrace should have been if everything had originally worked as it should in gdb:

b31ae5ed gst_pad_send_event_unchecked en /home/enrique/buildroot/output5/build/gstreamer1-1.10.4/gst/gstpad.c:5571

b31a46c1 gst_debug_log en /home/enrique/buildroot/output5/build/gstreamer1-1.10.4/gst/gstinfo.c:444

b31b7ead gst_pad_send_event en /home/enrique/buildroot/output5/build/gstreamer1-1.10.4/gst/gstpad.c:5775

b666250d WebCore::AppendPipeline::injectProtectionEventIfPending() en /home/enrique/buildroot/output5/build/wpewebkit-custom/build-Release/../Source/WebCore/platform/graphics/gstreamer/mse/AppendPipeline.cpp:1360

b657b411 WTF::GRefPtr<_GstEvent>::~GRefPtr() en /home/enrique/buildroot/output5/build/wpewebkit-custom/build-Release/DerivedSources/ForwardingHeaders/wtf/glib/GRefPtr.h:76

b5fb0319 WebCore::HTMLMediaElement::pendingActionTimerFired() en /home/enrique/buildroot/output5/build/wpewebkit-custom/build-Release/../Source/WebCore/html/HTMLMediaElement.cpp:1179

b61a524d WebCore::ThreadTimers::sharedTimerFiredInternal() en /home/enrique/buildroot/output5/build/wpewebkit-custom/build-Release/../Source/WebCore/platform/ThreadTimers.cpp:120

b61a5291 WTF::Function<void ()>::CallableWrapper<WebCore::ThreadTimers::setSharedTimer(WebCore::SharedTimer*)::{lambda()#1}>::call() en /home/enrique/buildroot/output5/build/wpewebkit-custom/build-Release/DerivedSources/ForwardingHeaders/wtf/Function.h:101

b6c809a3 operator() en /home/enrique/buildroot/output5/build/wpewebkit-custom/build-Release/../Source/WTF/wtf/glib/RunLoopGLib.cpp:171

b6c80991 WTF::RunLoop::TimerBase::TimerBase(WTF::RunLoop&)::{lambda(void*)#1}::_FUN(void*) en /home/enrique/buildroot/output5/build/wpewebkit-custom/build-Release/../Source/WTF/wtf/glib/RunLoopGLib.cpp:164

b6c80991 WTF::RunLoop::TimerBase::TimerBase(WTF::RunLoop&)::{lambda(void*)#1}::_FUN(void*) en /home/enrique/buildroot/output5/build/wpewebkit-custom/build-Release/../Source/WTF/wtf/glib/RunLoopGLib.cpp:164

b2ad4223 g_main_context_dispatch en :?

b6c80601 WTF::{lambda(_GSource*, int (*)(void*), void*)#1}::_FUN(_GSource*, int (*)(void*), void*) en /home/enrique/buildroot/output5/build/wpewebkit-custom/build-Release/../Source/WTF/wtf/glib/RunLoopGLib.cpp:40

b6c80991 WTF::RunLoop::TimerBase::TimerBase(WTF::RunLoop&)::{lambda(void*)#1}::_FUN(void*) en /home/enrique/buildroot/output5/build/wpewebkit-custom/build-Release/../Source/WTF/wtf/glib/RunLoopGLib.cpp:164

b6c80991 WTF::RunLoop::TimerBase::TimerBase(WTF::RunLoop&)::{lambda(void*)#1}::_FUN(void*) en /home/enrique/buildroot/output5/build/wpewebkit-custom/build-Release/../Source/WTF/wtf/glib/RunLoopGLib.cpp:164

b2adfc49 g_poll en :?

b2ad44b7 g_main_context_iterate.isra.29 en :?

b2ad477d g_main_loop_run en :?

b6c80de3 WTF::RunLoop::run() en /home/enrique/buildroot/output5/build/wpewebkit-custom/build-Release/../Source/WTF/wtf/glib/RunLoopGLib.cpp:97

b6c654ed WTF::RunLoop::dispatch(WTF::Function<void ()>&&) en /home/enrique/buildroot/output5/build/wpewebkit-custom/build-Release/../Source/WTF/wtf/RunLoop.cpp:128

b5937445 int WebKit::ChildProcessMain<WebKit::WebProcess, WebKit::WebProcessMain>(int, char**) en /home/enrique/buildroot/output5/build/wpewebkit-custom/build-Release/../Source/WebKit/Shared/unix/ChildProcessMain.h:64

b27b2978 __bss_start en :?

I hope you find this trick useful and the scripts handy in case you ever have to resort to examining the raw stack to get a meaningful backtrace.

Happy debugging!

by eocanha on October 16, 2020 06:07 PM

At the end of the last year I wrote a long blog post summarizing the main work I was involved with as part of Igalia’s Chromium team. In it I mentioned that a big chunk of my time was spent working on the migration to the new C++ Mojo types across the entire codebase of Chromium, in the context of the Onion Soup 2.0 project.

For those of you who don’t know what Mojo is about, there is extensive information about it in Chromium’s documentation, but for the sake of this post, let’s simplify things and say that Mojo is a modern replacement for Chromium’s legacy IPC APIs which enables a better, simpler and more direct way of communication among all of Chromium’s different processes.

One interesting thing about this conversion is that, even though Mojo was already “the new thing” compared to Chromium’s legacy IPC APIs, the original Mojo API presented a few problems that could only be fixed with a newer API. This is the main reason that motivated this migration, since the new Mojo API fixed those issues by providing less confusing and less error-prone types, as well as additional checks that would force your code to be safer than before, and all this done in a binary compatible way. Please check out the Mojo Bindings Conversion Cheatsheet for more details on what exactly those conversions would be about.

Another interesting aspect of this conversion is that, unfortunately, it wouldn’t be as easy as running a “search & replace” operation, since in most cases deeper changes would need to be made to make sure that the migration wouldn’t break either existing tests or production code. This is the reason why we often had to write bigger refactorings than one would have anticipated for some of those migrations, or why some patches took a bit longer to land, as they would span too many directories, making the merging process extra challenging.

Now combine all this with the fact that we were confronted with about 5000 instances of the old types in the Chromium codebase when we started, spanning nearly every single subdirectory of the project, and you’ll probably understand why this was a massive feat that would take quite some time to tackle.

Turns out, though, that just 6 months after we started working on this, and with more than 1100 patches landed upstream, our team managed to have nearly all the existing uses of the old APIs migrated to the new ones, reaching a point where, by the end of December 2019, we had completed 99.21% of the entire migration! That is, we basically had almost everything migrated back then and the only part we were missing was the migration of //components/arc, as I already announced in this blog back in December and in the chromium-mojo mailing list.

Progress of migrations to the new Mojo syntax by December 2019

This was good news indeed. But the fact that we didn’t manage to reach 100% was still a bit of a pain point because, as Kentaro Hara mentioned in the chromium-mojo mailing list yesterday, “finishing 100% is very important because refactoring projects that started but didn’t finish leave a lot of tech debt in the code base”. And surely we didn’t want to leave the project unfinished, so we kept collaborating with the Chromium community in order to finish the job.

The main problem with //components/arc was that, as explained in the bug where we tracked that particular subtask, we couldn’t migrate it yet because the external libchrome repository was still relying on the old types! Thus, even though almost nothing else in Chromium was using them at that point, migrating those .mojom files under //components/arc to the new types would basically break libchrome, which wouldn’t have a recent enough version of Mojo to understand them (and no, according to the people collaborating with us on this effort at that particular moment, getting Mojo updated to a new version in libchrome was not really a possibility).

So, in order to fix this situation, we collaborated closely with the people maintaining the libchrome repository (which is external to Chromium’s repository and still relied on the old Mojo types) to get the remaining migration, inside //components/arc, unblocked. And after a few months of small changes here and there to provide the libchrome folks with the tools they’d need to proceed with the migration, they could finally integrate the necessary changes that would ultimately allow us to complete the task.

Once this important piece of the puzzle was in place, all that was left was for my colleague Abhijeet to land the CL that would migrate most of //components/arc to the new types (a CL which had been put on hold for about 6 months!), and then to land a few more CLs on top to make sure we got rid of any trace of the old types that might still be in the codebase (special kudos to my colleague Gyuyoung, who wrote most of those final CLs).

Progress of migrations to the new Mojo syntax by July 2020

After all this effort, which would sit on top of all the amazing work that my team had already done in the second half of 2019, we finally reached the point where we are today, when we can proudly and loudly announce that the migration of the old C++ Mojo types to the new ones is finally complete! Please feel free to check out the details on the spreadsheet tracking this effort.

So please join me in celebrating this important milestone for the Chromium project and enjoy the new codebase free of the old Mojo types. It’s been difficult but it definitely pays off to see it completed, something which wouldn’t have been possible without all the people who contributed along the way with comments, patches, reviews and any other type of feedback. Thank you all!

Last, while the main topic of this post is to celebrate the unblocking of these last migrations we had left since December 2019, I’d like to finish by acknowledging the work of all my colleagues from Igalia who have worked with me on this task since we started, one year ago: Abhijeet, Antonio, Gyuyoung, Henrique, Julie and Shin.

Now if you’ll excuse me, we need to get back to working on the Onion Soup 2.0 project because we’re not done yet: at the moment we’re mostly focused on converting remote calls using Chromium’s legacy IPC to Mojo (see the status report by Dave Tapuska) and helping finish Onion Soup’ing the remaining directories under //content/renderer (see the status report by Kentaro Hara), so there’s no time to waste. But those migrations will be material for another post, of course.

by mario on July 08, 2020 08:55 AM

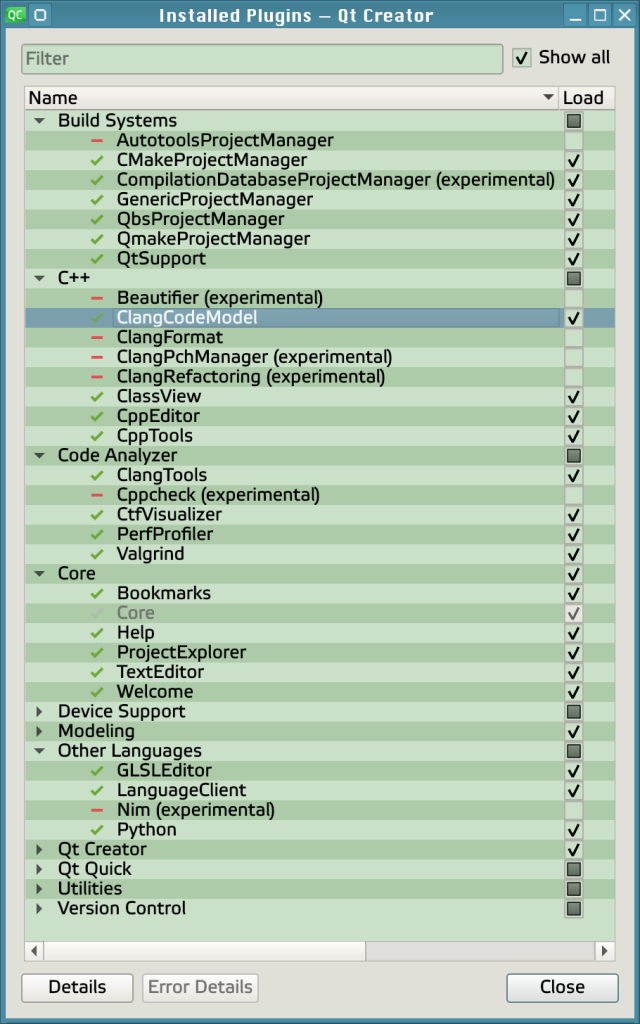

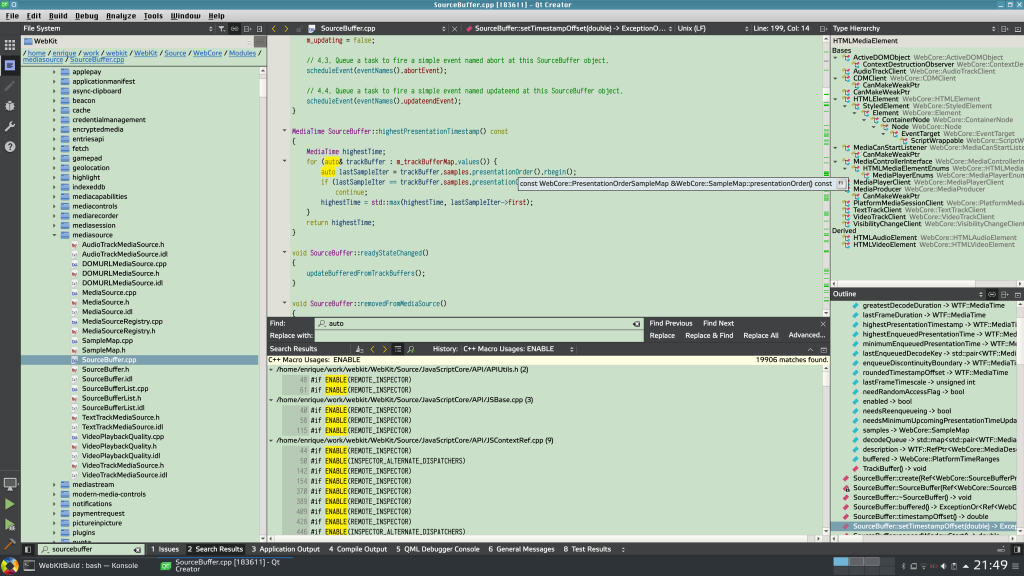

After the latest migration of WebKitGTK test bots to use the new SDK based on Flatpak, the old development environment based on jhbuild became deprecated. It can still be used with export WEBKIT_JHBUILD=1, but support for this way of working will gradually fade out.

I used to work in a chroot because I love the advantages of having an isolated and self-contained environment, but an issue in the way bubblewrap manages mountpoints basically made it impossible to use the new SDK from a chroot. It was time for me to bring my development environment up to date and have it working in my main Kubuntu 18.04 distro.

My main goal was to have a comfortable IDE that follows standard GUI conventions (that is, neither emacs nor vim) and has code indexing features that (more or less) work with the WebKit codebase. Qt Creator was providing all that to me in the old chroot environment thanks to some configuration tricks by Alicia, so it should be good for the new one.

I preferred to use the Qt Creator 4.12.2 offline installer for Linux, so I can download exactly the same version in the future in case I need it, but other platforms and versions are also available.

The WebKit source code can be downloaded as always using git:

git clone git.webkit.org/WebKit.git

It’s useful to add WebKit/Tools/Scripts and WebKit/Tools/gtk to your PATH, as well as any other custom tools you may have. You can customize your $HOME/.bashrc for that, but I prefer to have an env.sh environment script to be sourced from the current shell when I want to enter my development environment (by running webkit). If you’re going to use it too, remember to adjust the paths used there to your needs.
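For reference, the PATH part of such a script can be as small as the sketch below. This is not my actual env.sh, just a minimal illustration: the `WEBKIT_SRC` variable and its default location are hypothetical, so point it at wherever you cloned WebKit.

```shell
# Minimal env.sh sketch, meant to be sourced: ". ./env.sh"
# WEBKIT_SRC is a hypothetical variable; the default path is only an example.
WEBKIT_SRC="${WEBKIT_SRC:-$HOME/work/WebKit}"

# Put the WebKit helper scripts ahead of the rest of the PATH.
PATH="$WEBKIT_SRC/Tools/Scripts:$WEBKIT_SRC/Tools/gtk:$PATH"
export PATH
```

Sourcing it, rather than executing it, is what makes the PATH change stick in the current shell.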

Even if you have a pretty recent distro, it’s still worth having the latest Flatpak tools. Add Alex Larsson’s PPA to your apt sources:

sudo add-apt-repository ppa:alexlarsson/flatpak